It takes a lot of energy and water to develop the massive artificial intelligence (AI) models taking over the globe.

So much so that technology giants like Microsoft plan to restart nuclear plants to handle rising electricity costs.

Conventional thinking has been that creating the biggest and best new AI models needs a lot of hardware, which then requires a lot of juice.

But Chinese AI offering DeepSeek sank that premise with the release of two models that rival the capabilities of industry leaders while using fewer resources.

“DeepSeek’s breakthrough could mark a shift in the AI arms race,” University of California AI sustainability researcher Shaolei Ren said.

“[It’s shifted] from model performance to a focus on resource efficiency probably sooner than anyone anticipated.”

The announcement wiped $US600 billion ($960 billion) off the market value of Nvidia, the company that makes up to 95 per cent of the world’s AI graphics processing units. Energy providers, data centre hosts and uranium mining companies took a hit too.

But more efficiency may not result in lower energy usage overall. AI watchers are concerned the innovations made by DeepSeek will only encourage greater development as AI becomes more integrated into everyday computing.

How efficient is DeepSeek?

DeepSeek’s flagship AI program, called R1, can handle complex maths, science and coding problems.

It’s a large language model, a type of AI that’s trained on enormous amounts of data.

According to DeepSeek, R1 was on par with OpenAI’s top-of-the-line o1 model but 25 times cheaper for consumers to use.

Both are considered “frontier” models, meaning they are on the cutting edge of AI development.

Unlike DeepSeek’s, OpenAI’s code for its new models is “closed”. This means it’s not available for the public to replicate or for other companies to use.

There are other high-performing AI platforms, like Google’s Gemini 2.0, which are currently free to use.

But DeepSeek needs a lot less energy to meet the same output as other similar-performing models.

Across the sector, training AI models currently consumes far more energy than running the finished products.

These energy requirements can be inferred from how much an AI model costs to train.

DeepSeek’s R1 was based on its V3 model, which the company says cost $US5.6 million ($9 million) for its final training run, exclusive of development costs.

By comparison, Meta’s Llama 3.1 is thought to have cost about $US60 million ($96 million), using about 10 times the amount of computing required for V3.

Less computing time means less energy and less water to cool equipment.

University of Copenhagen computer scientist Raghavendra Selvan said DeepSeek, compared to Llama, was “easily an order of magnitude cheaper from an energy consumption point of view”.
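The "order of magnitude" description can be checked against the two cost figures quoted above. The sketch below is only illustrative: it uses cost as a rough stand-in for computing and energy, as the article does, and both dollar figures are estimates rather than audited numbers. The variable and function names are made up for this example.

```python
# Figures quoted in the article (US dollars); both are estimates.
V3_FINAL_RUN_COST_USD = 5.6e6   # DeepSeek's stated cost for V3's final training run
LLAMA_31_COST_USD = 60e6        # reported estimate for Meta's Llama 3.1


def cost_ratio(larger: float, smaller: float) -> float:
    """Return how many times larger the first cost is than the second."""
    return larger / smaller


ratio = cost_ratio(LLAMA_31_COST_USD, V3_FINAL_RUN_COST_USD)
print(f"Llama 3.1 cost roughly {ratio:.1f} times as much as V3's final run")
```

On these figures the ratio is a little under 11, which is consistent with both the "about 10 times" computing estimate and Dr Selvan's "order of magnitude".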

But while DeepSeek has made energy efficiency gains, Dr Selvan doubted it would reduce the overall energy consumption of generative AI as a sector in the long run.

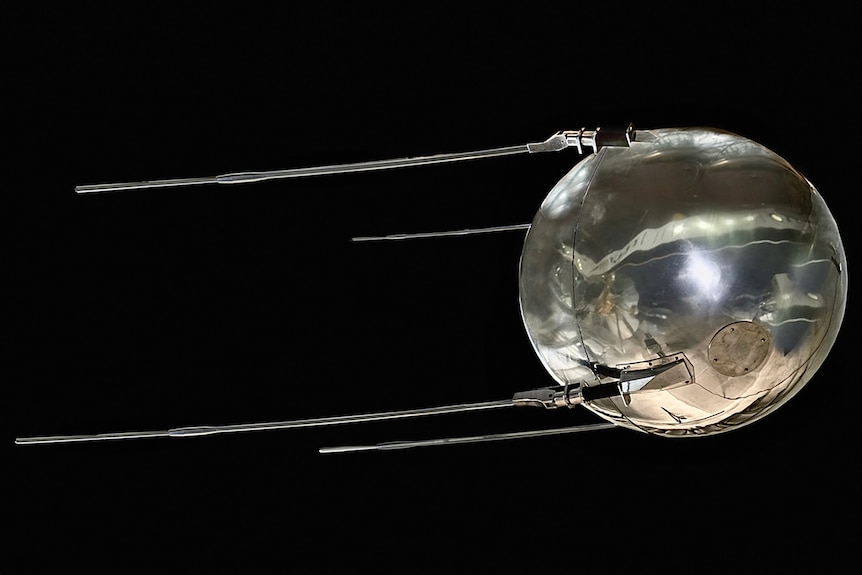

Is this AI’s Sputnik moment?

The launch of R1 has been likened to the Soviet Union launching Sputnik, the first satellite to orbit Earth, and jolting the US into the space race.

A model of the Sputnik-1 which was the first satellite shot into space. (Wikimedia: Steve Jurvetson, Sputnik-1, CC BY 2.0)

But in the AI development race between the US and China, it’s like the latter achieved Sputnik and gave its blueprints to the world.

Major US players will now be able to look at DeepSeek’s code and incorporate efficiency improvements.

“The results from DeepSeek show that we can develop even larger models, on even larger datasets,” Dr Selvan said.

“Furthermore, it invites more people to develop these models — which in itself is good for democratising these technologies, but can have a net increase in total energy consumption.

“If these models get more capable, and we use them more, the energy consumption will also increase. The classic case of Jevons paradox.”

Economist William Stanley Jevons came up with a paradox of technology efficiencies being negated by a resulting increase in use of the same technology. (Wikimedia: University of Manchester Libraries, William Stanley Jevons, CC BY-SA 4.0)

English economist William Stanley Jevons first described the paradox in 1865 in relation to the use of coal.

He suggested that making steam engines burn less coal led to more coal being used overall, because the improvements saw the technology adopted across more industries.
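One way to see how an efficiency gain can raise total consumption is a toy demand model. The sketch below is not Jevons's own formulation or anything taken from the researchers quoted here. It assumes demand for computing follows a simple constant-elasticity curve, and the elasticity values and baseline of 100 units are hypothetical.

```python
def total_energy_after_gain(
    baseline_energy: float, efficiency_gain: float, elasticity: float
) -> float:
    """Estimate total energy use after an efficiency improvement.

    Assumes (hypothetically) that the cost of a unit of work falls in line
    with the efficiency gain, and that demand responds with a constant
    price elasticity.
    """
    demand_multiplier = efficiency_gain ** elasticity   # more work gets requested
    energy_per_unit_multiplier = 1.0 / efficiency_gain  # each unit needs less energy
    return baseline_energy * demand_multiplier * energy_per_unit_multiplier


# A tenfold efficiency gain under three hypothetical demand responses.
for elasticity in (0.5, 1.0, 1.5):
    energy = total_energy_after_gain(100.0, 10.0, elasticity)
    print(f"elasticity {elasticity}: total energy {energy:.1f} (baseline 100.0)")
```

In this model total energy falls when elasticity is below 1, stays flat at exactly 1, and rises above the baseline when elasticity exceeds 1. The last case is the one the paradox describes.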

University of Pennsylvania computer scientist Benjamin Lee said while training costs were falling, frontier models would still require lots of graphics processing units, large data centres and significant amounts of energy.

“These new capabilities, for example, might include multi-modal models that can handle different types of data, [such as] audio and video in addition to text,” he said.

A report last week from the RAND Corporation, a private policy think tank that gets funding from the US government, suggested the world could need 68GW of extra energy capacity by 2027 to deal with AI growth, and 327GW by 2030.

By comparison, the capacity of Australia’s National Electricity Market, which services all jurisdictions except Western Australia and the Northern Territory, is 65.5GW.
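To put the RAND projections on the same scale as the Australian grid, the figures can be divided directly. This is a rough sketch using only the numbers quoted above. The names are invented for the example, and it compares capacity, not the energy actually generated or consumed.

```python
NEM_CAPACITY_GW = 65.5  # Australian grid capacity quoted in the article

# RAND's projected extra capacity needed worldwide for AI growth, by year.
RAND_EXTRA_CAPACITY_GW = {2027: 68.0, 2030: 327.0}


def multiples_of_nem(capacity_gw: float) -> float:
    """Express a capacity figure as a multiple of the quoted NEM capacity."""
    return capacity_gw / NEM_CAPACITY_GW


for year, capacity_gw in RAND_EXTRA_CAPACITY_GW.items():
    print(f"{year}: {capacity_gw:.0f}GW is about {multiples_of_nem(capacity_gw):.1f} times the NEM")
```

On these figures the 2027 projection is roughly one extra Australian grid, and the 2030 projection is roughly five.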

Even as training costs fall, Professor Lee said more data centres would be needed as humans and software agents send an increasing number of queries to trained models.

More use by consumers means more energy

Professor Lee said early indications were that generative AI models were reasoning more, and using more computing power and energy, to get better results.

“If generative AI is deployed and adopted widely for compelling applications, [operating] energy will be the main driver and concern in the discussion of rapidly growing energy costs,” he said.

One of the main public-facing uses of AI has been the proliferation of chatbots, although it can be used for much more technical problems by businesses.

But AI is becoming more integrated into everyday computing life and search engines.

Google is believed to hold about 90 per cent of the search engine market, and in 2009 the company estimated each query used about 0.3 watt-hours (Wh) of energy.

Light bulbs, depending on their efficiency, use about 5 to 100Wh for every hour they are switched on.

OpenAI’s ChatGPT model was estimated by research company SemiAnalysis in 2023 to require around 2.9Wh per request, or about 10 times as much energy as a Google search.

And Google searches powered by its AI model Gemini are estimated to use even more at 6.9 to 8.9Wh.

Gemini launched in the US last August with the target of reaching 1 billion people per month.

The energy costs could see a massive jump if AI is used across Google’s 2 trillion annual searches.
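A back-of-the-envelope calculation shows the scale of that jump. The sketch below multiplies the per-request estimates quoted above by 2 trillion searches a year. It assumes every search uses AI and that the per-request figures hold at that volume, neither of which is established, so it should be read as an upper-bound scenario and not a forecast.

```python
ANNUAL_SEARCHES = 2e12  # Google's roughly 2 trillion searches a year, as quoted above

# Estimated energy per request in watt-hours (Wh) as (low, high), from the article.
WH_PER_REQUEST = {
    "Google search (2009 estimate)": (0.3, 0.3),
    "ChatGPT (SemiAnalysis, 2023)": (2.9, 2.9),
    "Gemini-powered search": (6.9, 8.9),
}


def annual_twh(wh_per_request: float, requests: float = ANNUAL_SEARCHES) -> float:
    """Convert a per-request figure in Wh into terawatt-hours (TWh) a year."""
    return wh_per_request * requests / 1e12  # 1 TWh = 1e12 Wh


for label, (low, high) in WH_PER_REQUEST.items():
    print(f"{label}: {annual_twh(low):.1f} to {annual_twh(high):.1f} TWh a year")
```

Under these assumptions, conventional search would use about 0.6TWh a year, while AI-powered search at the Gemini estimates would use between 13.8TWh and 17.8TWh.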

It will make the company’s plans to be 100-per-cent carbon-free by 2030 harder.

The company’s emissions have increased 48 per cent since 2019.

Last year, its greenhouse gas emissions increased by 13 per cent as it ramped up computing power to support the AI transition.

Google’s annual water usage also increased 17 per cent to 23 gigalitres.

Other large tech companies such as Meta and Microsoft have set similar targets, and face similar decarbonisation challenges.

RAND predicts a single training run of an AI model could require 1GW of capacity by 2028 and 8GW by 2030.

But breakthroughs in the energy efficiency of the graphics processing units themselves could reduce electricity requirements, and a potential lack of data to train models could also slow growth, according to RAND’s report.

AI is also helping to optimise renewable energy technology such as solar and wind, and assisting with the analysis of energy networks and weather observations.

Can you opt out of AI (and reduce emissions)?

Unlike on some other websites, you can’t disable the AI that runs automatically on Google searches.

Google does not currently let you disable the AI assistance for its searches. (Google)

Professor Lee said AI searches provided valuable data to Google during development to see if its models were effective.

He thought the use of AI for all search in the future might depend upon how expensive it becomes to run.

“Google might ask users to opt-in or subscribe for premium features,” Professor Lee said.

But Dr Selvan believes we may already be approaching the point where opting out is no longer possible.

“I expect that generative AI models will become pervasive, and classic search as we know will get phased out in the coming years,” he said.

Dr Ren said not only should there be an opt-out option for users, but energy and water usage should be disclosed too.

“This level of disclosure and transparency would allow users to better understand the cost of their interactions and promote more sustainable AI usage,” he said.

For both open and closed AI models, there is a lack of transparency about the resources they use, expressed in terms consumers can easily understand.

Dr Selvan said there should be an industry standard to measure the environmental sustainability of the sector.

The International Organisation for Standardisation is due to issue a set of criteria for AI sustainability this year.

Energy and water consumption, carbon footprint, waste, system life cycles and supply chains are all set to be included.

But it will be some time before it is clear whether companies adopt the principles, what the true extent of the sustainability issues is, and whether the Jevons paradox comes to pass.